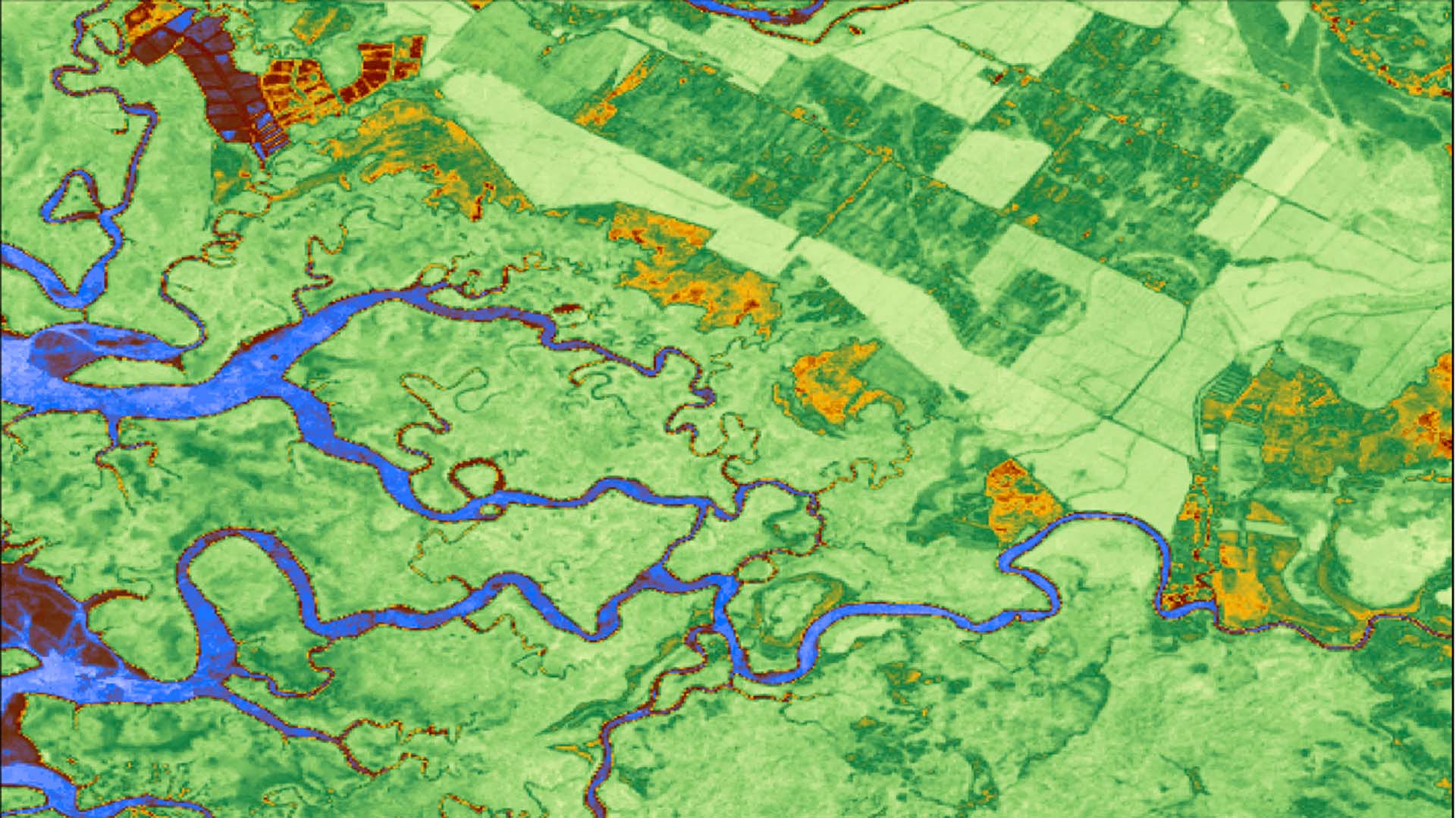

Accurate land-cover maps are the backbone of effective conservation and climate policy. Policymakers depend on them to track initiatives like 30×30, companies use them to report on nature-related risks, and scientists use them to monitor carbon sinks such as forests, wetlands, and mangroves. For example, wetlands alone store about 20% of Earth’s organic carbon while covering only 1% of its surface. Missing or misclassifying them can derail strategies before they even start.

Dr. Hamed Alemohammad, Director of the Center for Geospatial Analytics at Clark University, recently gave an executive briefing on scientific advances in this field, including new efforts to capture ecosystem composition at scale.

Dr. Alemohammad traced the evolution of mapping approaches—from early supervised classifications to modern machine learning systems and the emerging field of AI foundation models—clarifying what each can and cannot deliver. The webinar was hosted by Paul Bunje of Conservation X Labs and Karl Burkart of the Nature Data Lab at One Earth.

We recommend watching the full webinar (embedded above) to hear the discussion in its entirety, along with wonderful questions from the audience. This article is designed as a companion piece—a structured summary of the main topics that Dr. Alemohammad covered, including the limitations of today’s global maps, the promise of foundation models, and the challenges of integrating AI into conservation practice.

As Dr. Alemohammad emphasized:

“All users—no matter their metric—require better, more accurate, higher-quality maps.”

Despite decades of progress, today’s global land-cover products share stubborn weaknesses.

These issues are frustrating for scientists, sure, but they have real consequences. They can misdirect conservation funding, undermine regulatory compliance, and leave vulnerable ecosystems overlooked.

In Murang’a County, Kenya, three leading global products disagreed on where croplands and built areas were located. Local agencies couldn’t rely on any of them for agricultural planning.
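One way to make this kind of disagreement concrete is to compare products pixel by pixel. The sketch below is illustrative only: the three tiny grids, their class names, and the function names are invented for the example and are not data from the Murang’a study.

```python
from collections import Counter

def agreement_map(products):
    """For each pixel, return (most common label, number of products voting for it)."""
    n_rows, n_cols = len(products[0]), len(products[0][0])
    result = []
    for r in range(n_rows):
        row = []
        for c in range(n_cols):
            labels = [p[r][c] for p in products]
            label, votes = Counter(labels).most_common(1)[0]
            row.append((label, votes))
        result.append(row)
    return result

def full_agreement_fraction(products):
    """Share of pixels on which every product assigns the same class."""
    cells = [cell for row in agreement_map(products) for cell in row]
    return sum(1 for _, votes in cells if votes == len(products)) / len(cells)

# Hypothetical 2x3 land-cover grids from three products (toy values).
product_a = [["crop", "crop", "built"], ["tree", "crop", "built"]]
product_b = [["crop", "tree", "built"], ["tree", "built", "built"]]
product_c = [["crop", "crop", "crop"], ["tree", "tree", "built"]]

products = [product_a, product_b, product_c]
print(full_agreement_fraction(products))  # share of pixels with unanimous labels
```

Pixels with a low vote count are the places where local validation is most likely to pay off.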

The fix came through a teacher–student model: experts labeled high-resolution commercial imagery to train a “teacher,” which then guided a “student” model built on open Sentinel-2 data. The result was a sharper, more accurate map now in use by local decision-makers.
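The teacher–student idea can be sketched in a few lines. This is a minimal illustration, not the method used in Murang’a: the nearest-centroid classifier, the feature values, and all names are assumptions chosen to keep the example small. The teacher learns from a handful of expert labels on detailed (high-resolution) features, labels additional locations, and the student learns from those labels using only coarser, openly available features.

```python
from statistics import mean

class NearestCentroid:
    """Toy classifier: assign each sample to the class with the closest mean."""

    def fit(self, X, y):
        self.centroids = {}
        for label in set(y):
            rows = [x for x, lab in zip(X, y) if lab == label]
            self.centroids[label] = [mean(col) for col in zip(*rows)]
        return self

    def predict(self, X):
        def sq_dist(a, b):
            return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
        return [min(self.centroids, key=lambda lab: sq_dist(x, self.centroids[lab]))
                for x in X]

# 1. Experts label a few locations using high-resolution features (toy values).
expert_highres = [[0.9, 0.8, 0.1], [0.8, 0.9, 0.2], [0.1, 0.2, 0.9], [0.2, 0.1, 0.8]]
expert_labels = ["cropland", "cropland", "built", "built"]
teacher = NearestCentroid().fit(expert_highres, expert_labels)

# 2. The teacher labels locations where both feature sets exist but no expert label does.
unlabeled_highres = [[0.85, 0.9, 0.15], [0.95, 0.7, 0.1], [0.15, 0.1, 0.85], [0.1, 0.3, 0.9]]
unlabeled_open = [[0.7, 0.2], [0.8, 0.3], [0.2, 0.7], [0.3, 0.8]]  # coarser open-data features
pseudo_labels = teacher.predict(unlabeled_highres)

# 3. The student learns from the teacher's labels using only the open-data features.
student = NearestCentroid().fit(unlabeled_open, pseudo_labels)
student_predictions = student.predict([[0.6, 0.3], [0.1, 0.9]])
print(student_predictions)
```

The design point is that the expensive imagery is needed only during training; once trained, the student runs on open data alone.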

This case demonstrates the lesson: global AI needs local grounding.

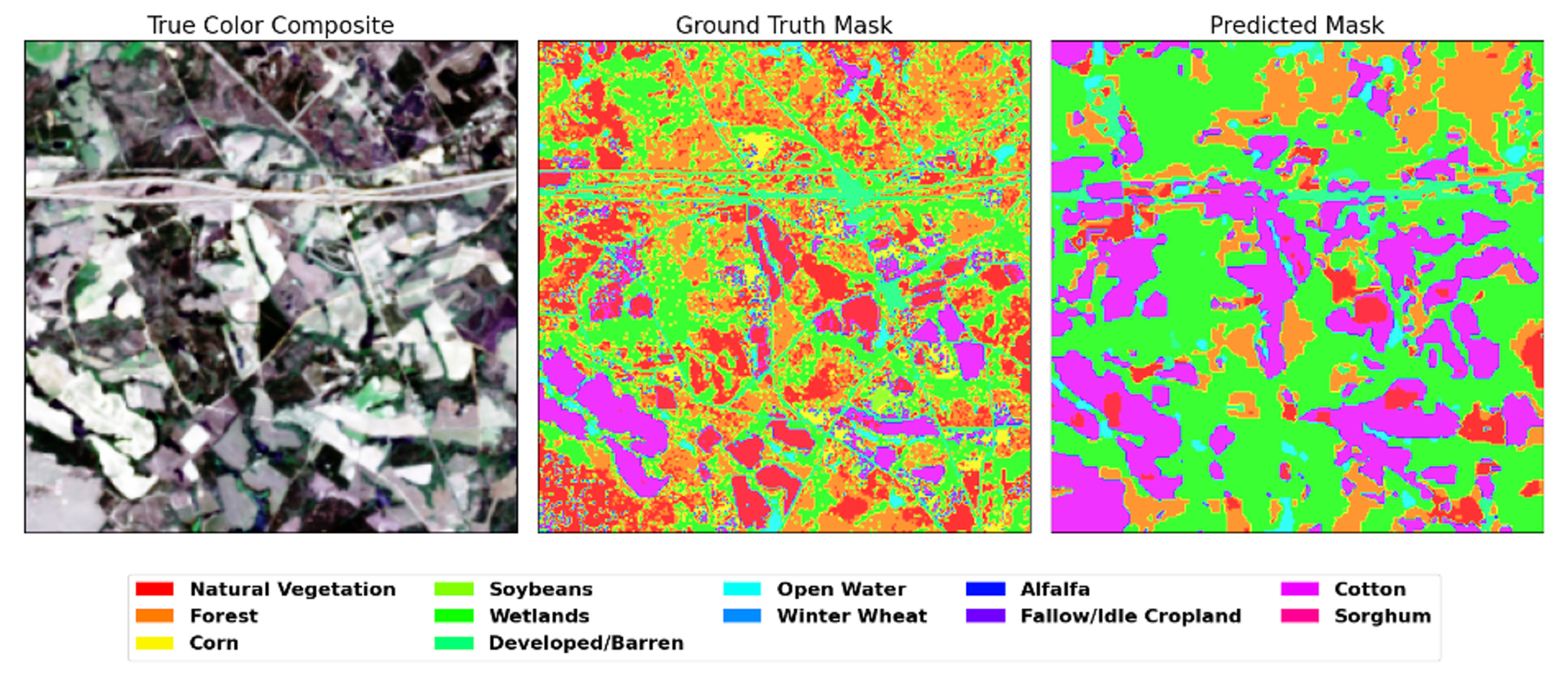

Above: Crop classification prediction generated by NASA and IBM’s open-source Prithvi Geospatial artificial intelligence model.

Foundation models (FMs) represent a major shift in geospatial AI. Trained on vast satellite archives using self-supervised methods, they learn general “representations” of the Earth that can later be fine-tuned for specific tasks.
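The fine-tuning step can be pictured as training a small task-specific "head" on top of a frozen encoder. The sketch below is a toy under loud assumptions: the hand-written `frozen_encoder` merely stands in for a pretrained model's encoder (real foundation models learn their representations from data), and the pixel values, labels, and perceptron head are invented for illustration.

```python
def frozen_encoder(pixel):
    """Stand-in for a pretrained encoder: maps 4 bands to a 2-value embedding.
    Hypothetical; it is never updated during training below."""
    blue, green, red, nir = pixel
    ndvi = (nir - red) / (nir + red)
    brightness = (blue + green + red + nir) / 4
    return [ndvi, brightness]

def train_head(embeddings, labels, epochs=50):
    """Train a perceptron head on fixed embeddings (labels are 0 or 1)."""
    weights = [0.0] * len(embeddings[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(embeddings, labels):
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = y - (1 if score > 0 else 0)
            weights = [w + error * xi for w, xi in zip(weights, x)]
            bias += error
    return weights, bias

def predict(pixels, weights, bias):
    """Encode each pixel with the frozen encoder, then apply the trained head."""
    out = []
    for pixel in pixels:
        x = frozen_encoder(pixel)
        score = sum(w * xi for w, xi in zip(weights, x)) + bias
        out.append(1 if score > 0 else 0)
    return out

# Toy labeled pixels (blue, green, red, near-infrared); 1 = vegetation, 0 = other.
train_pixels = [
    [0.05, 0.08, 0.04, 0.50],
    [0.04, 0.07, 0.05, 0.45],
    [0.20, 0.22, 0.25, 0.28],
    [0.30, 0.30, 0.32, 0.30],
]
train_labels = [1, 1, 0, 0]

weights, bias = train_head([frozen_encoder(p) for p in train_pixels], train_labels)
print(predict(train_pixels, weights, bias))
```

Only the head's few parameters are learned here, which is why a modest number of local labels can be enough to adapt a large pretrained model to a specific mapping task.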

“In the end, these models are not the map. They are just another tool that help us do the mapping better.”

Several Earth observation foundation models are now available:

Each has strengths and weaknesses, but all share the principle: they’re starting points, not decision-ready maps.

One of the thorniest challenges is reconciling the need for standardized global reporting with local ecological definitions. Regulators want comparability; practitioners need context.

A promising solution is hierarchical taxonomies:

This approach prevents “one-size-fits-all” categories from erasing local reality.
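A hierarchical taxonomy can be represented as a simple parent lookup, so that detailed local classes roll up to broader reporting classes without being discarded. The class names and hierarchy below are hypothetical examples, not a taxonomy proposed in the webinar.

```python
# Hypothetical taxonomy: each class points to its broader parent class.
PARENT = {
    "mangrove": "wetland",
    "peat swamp": "wetland",
    "wetland": "natural land",
    "smallholder tea": "cropland",
    "irrigated rice": "cropland",
    "cropland": "cultivated land",
}

def lineage(label):
    """Return the label followed by all of its ancestors, most specific first."""
    path = [label]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path

def roll_up(label, reporting_classes):
    """Map a local label to the nearest ancestor used in a reporting scheme."""
    for candidate in lineage(label):
        if candidate in reporting_classes:
            return candidate
    return None  # no ancestor of this label appears in the reporting scheme

global_scheme = {"wetland", "cropland"}
print(lineage("mangrove"))
print(roll_up("smallholder tea", global_scheme))
```

Local maps keep the fine-grained label, while global reports read the rolled-up class from the same record.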

AI foundation models are powerful accelerators, but they’re not magic bullets. Real-world value depends on pairing them with local data, expert validation, and policy frameworks that embrace iteration.

Priorities for the next stage include:

“It is not a purely technological question. This is an AI-human collaboration problem.”

We’re at an inflection point. Foundation models like Prithvi-EO and AlphaEarth won’t hand us perfect maps, but they can make land-cover mapping more accurate, faster, and more scalable than ever before. Combined with expert insight, local context, and regulatory clarity, they can help conservation finally “put nature on the map”—in ways that drive real, measurable action.